ANNSER - A Neural Network Simulator for Education and Research

I've just launched ANNSER on GitHub.

ANNSER stands for A Neural Network Simulator for Education and Research.

It is licensed under the MIT license, so it can be used for both Open Source and Commercial projects.

ANNSER is just a GitHub skeleton at the moment. I have some unreleased code which I will be committing over the next few days.

I'm hoping that ANNSER will eventually offer the same set of features as established ANN libraries such as TensorFlow, Caffe and Torch, and I'd like to see a GUI for the ANNSER DSL.

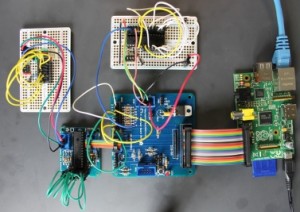

ANNSER will be implemented in Dyalog APL. The GUI will probably be implemented in JavaScript and run in a browser. All the code will run on the Raspberry Pi family, though you will be able to use other platforms if you wish.

There's a huge amount of work needed to complete the project, but we should have a useful Iteration 1 within a few weeks.

Why APL?

I have several reasons for choosing APL as the main implementation language:

- It's my favourite language. I love Python, and I've used it since the last millennium, but I find APL more expressive, performant and productive.

- With APL you can run serious networks on the $5 Raspberry Pi Zero, which makes it very attractive for educational users.

- APL was created as a language for exposition.

- APL is unrivalled in its handling of arrays, and ANN algorithms are naturally expressed as operations on arrays.

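Here's a sigmoid-neuron calculation, a layer of neurons applied to a random input vector, written three ways: plain Python, numpy and APL.

Python version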

```python
import random
from math import exp

def random_vector(cols):
    return [random.random() for i in range(cols)]

def random_vov(rows, cols):
    # a "vector of vectors": rows lists, each of length cols
    return [random_vector(cols) for j in range(rows)]

def dot_product(v1, v2):
    return sum(a * b for (a, b) in zip(v1, v2))

def inner_product(vov, v2):
    # one dot product per row of vov
    return [dot_product(v1, v2) for v1 in vov]

def sigmoid(x):
    return 1.0 / (1.0 + exp(-x))

def sigmoid_neuron(vov, v2):
    return [sigmoid(x) for x in inner_product(vov, v2)]

mat = random_vov(3, 4)
vec = random_vector(4)
print(sigmoid_neuron(mat, vec))
```

numpy version

```python
from numpy import array, exp, inner
from numpy.random import random

def random_vector(cols):
    return array([random() for i in range(cols)])

def random_mat(rows, cols):
    return array([random_vector(cols) for j in range(rows)])

def sigmoid(m, v):
    # inner(m, v) takes the dot product of each row of m with v
    return 1.0 / (1.0 + exp(-inner(m, v)))

mat = random_mat(300, 400)
vec = random_vector(400)
s = sigmoid(mat, vec)
```

APL

```apl
mat ← 0.01×?3 4⍴100
vec ← 0.01×?4⍴100
sn ← {÷1+*-⍺+.×⍵}
mat sn vec
```

I know which I prefer :)
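For readers new to APL, here is a rough NumPy transliteration of the APL above, one primitive per step. It's a sketch, assuming NumPy and Dyalog's default index origin (⎕IO←1, so `?100` draws an integer from 1 to 100); the function name `sn_steps` is hypothetical. Because APL evaluates right to left, the steps in `sn` run from `⍺+.×⍵` outwards to `÷`.

```python
import numpy as np

rng = np.random.default_rng()

# mat ← 0.01×?3 4⍴100 : a 3×4 array of random integers 1..100, scaled by 0.01
mat = 0.01 * rng.integers(1, 101, size=(3, 4))
# vec ← 0.01×?4⍴100 : a length-4 vector built the same way
vec = 0.01 * rng.integers(1, 101, size=4)

def sn_steps(alpha, omega):
    """Hypothetical step-by-step equivalent of sn ← {÷1+*-⍺+.×⍵}."""
    z = alpha @ omega   # ⍺+.×⍵ : inner product (sum of products)
    z = -z              # -     : negate
    z = np.exp(z)       # *     : exponential (monadic power)
    z = 1 + z           # 1+    : add one
    return 1 / z        # ÷     : reciprocal (monadic divide)

s = sn_steps(mat, vec)
print(s)  # one sigmoid output per row of mat
```

Each step is a whole-array operation with no explicit loop over elements, which is what the APL one-liner expresses directly.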
