Get rid of Magic Numbers from your code

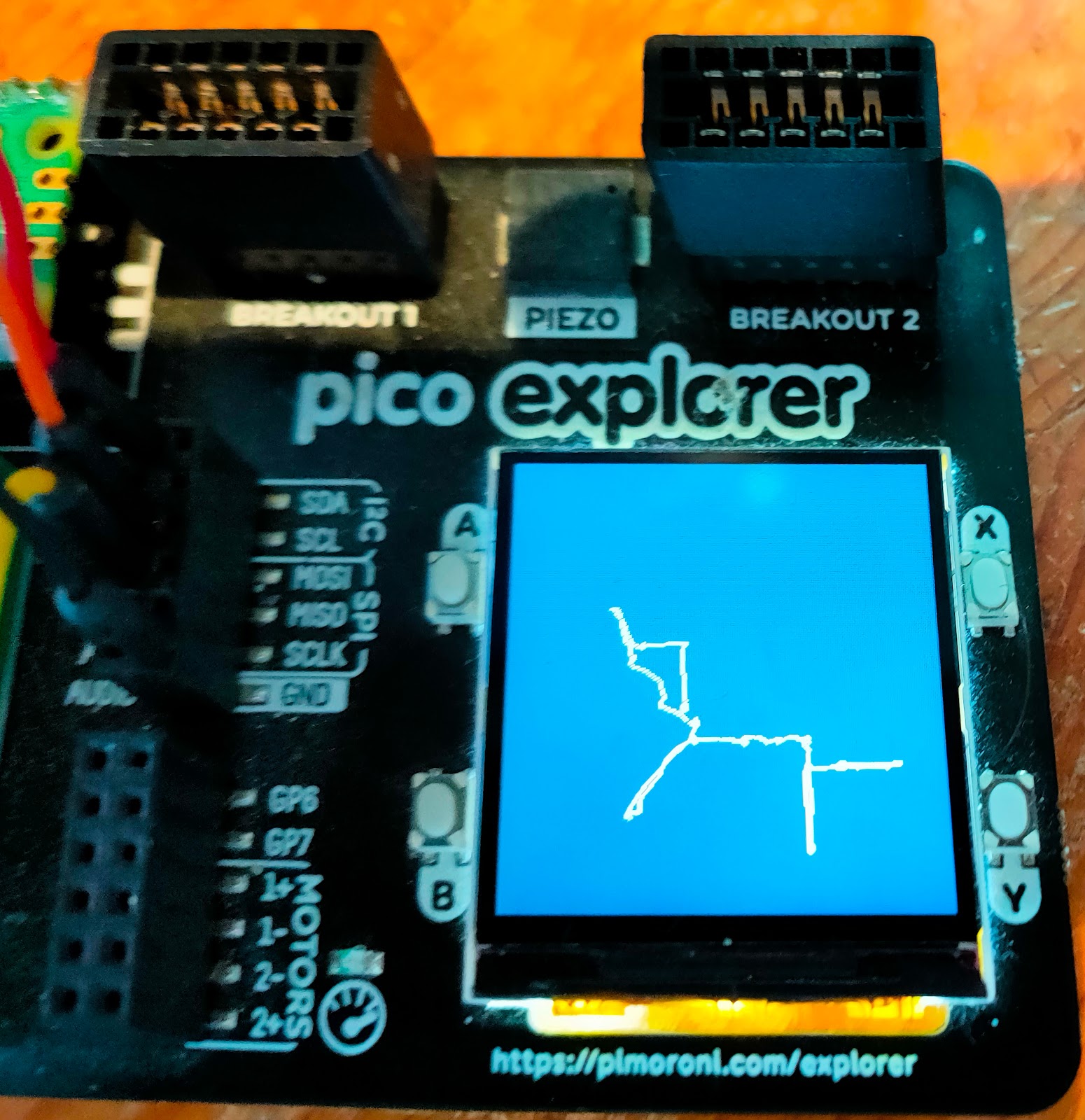

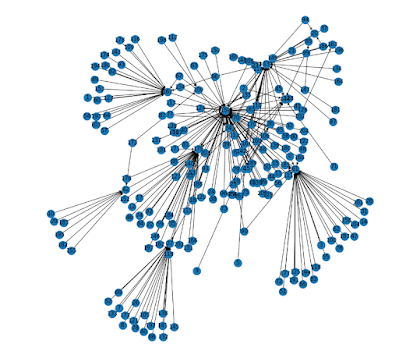

Last November I got really perplexed. I was looking at some code for the Pimoroni Explorer Base. I love that hardware. It comes with lots of useful peripherals and plenty of sample code to show you how to control them. One of the examples is called noise. It plays a simple tune on the Explorer Base Piezo buzzer, and shows the rise and fall of the notes on the built-in display. You tell the program what notes to play by creating a list like this: tones = [ "AS6" , "A6" , "AS6" , "P" , "AS5" , "P" , "AS5" , "P" , "F6" , "DS6" , "D6" , "F6" , "AS6" ,] The code is easy to follow, but one thing really puzzled me. The program turns each note into a frequency by looking up the name of the note in tones , a massive dictionary with names as keys and integer frequencies as values. I could read the code, but I was still puzzled. Where did all those frequencies