Pimoroni Explorer Hat Tricks

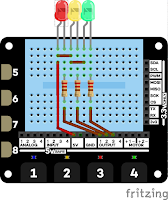

Yesterday I talked about my plans for the Pimoroni Explorer HAT. I've started the GitHub repository for the code from Explorer Hat Tricks. That's the ebook I'm writing for users of the Explorer HAT, HAT Pro and pHAT.

At present there are just a few examples and a single project, but I only started yesterday :) I'll post here and tweet (@rareblog) as more code becomes available.

Leanpub

I'm developing the ebook on Leanpub, a publishing platform. Leanpub is good for authors and great for readers. Readers like it because they get

- a chance to see a sample
- free lifetime updates for every book in their library, paid-for or free
- a 45-day money-back guarantee, and
- DRM-free content in pdf, mobi and epub formats.

Try the code out

You'll need

- a Raspberry Pi with a 40-pin header (Zero, 2, 3 or 4) running Raspbian Buster
- a Pimoroni Explorer HAT Pro. Most of the code will also work on the original HAT or pHAT, but some will need the Pro f...