Benchmarking process for TF-TRT, and a workaround for the Coral USB Accelerator

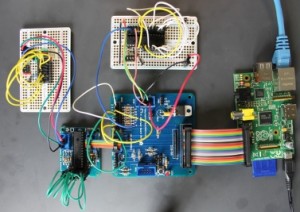

A couple of days ago I published some benchmarking results running a TF-TRT model on the Pi and Jetson Nano. I said I'd write up the benchmarking process. You'll find the details below. The code I used is on GitHub.

I've also managed to get a Coral USB Accelerator running with a Raspberry Pi 4. I encountered a minor problem, and I have explained my simple but very hacky workaround at the end of the post.

TensorFlow and TF-TRT benchmarks

Setup

The process was based on this excellent article, written by Chengwei Zhang.

On my workstation

I started by following Chengwei Zhang's recipe. I trained the model on my workstation using step1.ipynb and then copied trt_graph.pb from my workstation to the Pi 4.

On the Raspberry Pi 4

I used a virtual environment created with pipenv, and installed jupyter and pillow.

I downloaded and installed this unofficial wheel.

I tried to run step2.ipynb but encountered an import error. This turned out to be an old TensorFlow bug resurfacing.

The maintainer of the wheel will fix the problem when time permits, but I used a simple workaround.

I used cd `pipenv --venv` to go to the location of the virtual environment, and then ran cd lib/python3.7/site-packages/tensorflow/contrib/ to move to the directory containing the offending file, __init__.py.

The problem lines are

    if os.name != "nt" and platform.machine() != "s390x":
      from tensorflow.contrib import cloud

These try to import cloud from tensorflow.contrib, which isn't there and fortunately isn't needed :)

I replaced the second line with pass using

sed -i '/from tensorflow.contrib import cloud/s/^/ pass # ' __init__.py

With that change in place, step2.ipynb ran and I captured the timings.
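For anyone who would rather not edit the file with sed, the same one-line patch can be sketched in Python. This is a minimal illustration rather than the method used above: the helper name `neutralise_cloud_import` is hypothetical, and it assumes the import sits on a line of its own.

```python
import re

# Matches the offending import and captures its leading indentation.
CLOUD_IMPORT = re.compile(r"^(\s*)(from tensorflow\.contrib import cloud)\s*$")


def neutralise_cloud_import(source: str) -> str:
    """Return source with the cloud import replaced by `pass`.

    The original statement is kept as a trailing comment so the change
    is easy to spot and to undo. Hypothetical helper, for illustration only.
    """
    patched = []
    for line in source.splitlines():
        match = CLOUD_IMPORT.match(line)
        if match:
            indent, statement = match.groups()
            line = f"{indent}pass  # {statement}"
        patched.append(line)
    return "\n".join(patched) + "\n"


if __name__ == "__main__":
    sample = (
        'if os.name != "nt" and platform.machine() != "s390x":\n'
        "  from tensorflow.contrib import cloud\n"
    )
    print(neutralise_cloud_import(sample))
```

To apply it, read __init__.py, pass its text through the function and write the result back, keeping a backup copy of the original file.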

Later, I ran raw-mobile-netv2.ipynb to see how long it took to run the training session, and to save the model and frozen graph on the Pi.

On the Jetson Nano

I used the Nano that I had configured for my series on Getting Started with the Jetson Nano; it had NVIDIA's TensorFlow, pillow and jupyter lab installed.

I found that I could not load the saved/imported trt_graph.pb file on the Nano.

Since running the original training stage on the Pi did not take as long as I'd expected, I ran step1.ipynb on the Nano and used the locally created trt_graph.pb file, which loaded OK.

Then I ran step2.ipynb and captured the timings which I published.
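The notebooks contain the actual timing code. As a rough sketch of how timings like these can be captured, the helper below runs a few untimed warm-up calls and then averages several timed ones. The names `time_inference`, `warmup` and `runs` are assumptions made for this example, and the stand-in workload would be replaced by a function that runs the loaded graph on one image.

```python
import time


def time_inference(predict, warmup=2, runs=10):
    """Return (mean seconds per call, calls per second) for predict()."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # The first calls are often much slower than later ones (setup, caches),
    # so run a few untimed iterations before measuring.
    for _ in range(warmup):
        predict()
    start = time.perf_counter()
    for _ in range(runs):
        predict()
    elapsed = time.perf_counter() - start
    mean = elapsed / runs
    fps = runs / elapsed if elapsed > 0 else float("inf")
    return mean, fps


if __name__ == "__main__":
    # Stand-in workload; replace with a function that runs one inference.
    mean, fps = time_inference(lambda: sum(i * i for i in range(100_000)))
    print(f"{mean:.4f} s per call, {fps:.1f} calls per second")
```

Separating warm-up from the timed runs makes it easier to compare devices fairly, since one-off start-up costs can differ a lot between platforms.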

Using the Coral USB Accelerator with the Raspberry Pi 4

The Coral USB Accelerator comes with very clear installation instructions, but these do not currently work on the Pi 4.

A quick check of the install script revealed a hard-coded check for the Pi 3B or 3B+. Since I don't normally use the Pi 3B, I changed that entry to accept a Pi 4.
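The install script is a shell script and is not reproduced here. The idea behind the change can be sketched in Python: compare the board's model string against a list of accepted prefixes, and add the Pi 4 to that list. The prefixes and the function name `is_supported` are illustrative assumptions, not the contents of the Coral script.

```python
# Accepted model-name prefixes. These strings are assumptions chosen for
# illustration; check the real model string on your own board.
ORIGINAL_PREFIXES = ("Raspberry Pi 3 Model B",)
PATCHED_PREFIXES = ORIGINAL_PREFIXES + ("Raspberry Pi 4 Model B",)


def is_supported(model: str, prefixes=PATCHED_PREFIXES) -> bool:
    """Return True if the model string starts with an accepted prefix."""
    # Model strings read from the device tree can end with a NUL byte,
    # so strip that (and surrounding whitespace) before comparing.
    cleaned = model.strip("\x00 \n")
    return cleaned.startswith(tuple(prefixes))


if __name__ == "__main__":
    pi4 = "Raspberry Pi 4 Model B Rev 1.1"
    print(is_supported(pi4, ORIGINAL_PREFIXES))  # check before the change
    print(is_supported(pi4))                     # check after the change
```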

When I ran the modified script I found a couple of further issues.

The wheel installed in the last step of the script expects you to be using python3.5 rather than the Raspbian Buster default of python3.7.

As a result, I had to (cough)

cd /usr/local/lib/python3.7/dist-packages/edgetpu/swig/

cp _edgetpu_cpp_wrapper.cpython-35m-arm-linux-gnueabihf.so _edgetpu_cpp_wrapper.cpython-37m-arm-linux-gnueabihf.so

and change all references in the demo paths from python3.5 to python3.7.
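The copy is needed because Python 3.7 only imports extension modules whose filename carries a matching ABI tag (cpython-37m here), so a file tagged cpython-35m is ignored. A binary built for 3.5 is not guaranteed to behave under 3.7 in general, which is why this counts as a hack. The renaming and path changes can be sketched as below; the function names and the demo path are hypothetical and used only for illustration.

```python
def retag_extension(filename: str, old: str = "35m", new: str = "37m") -> str:
    """Swap the CPython ABI tag in an extension module filename."""
    old_tag, new_tag = f".cpython-{old}-", f".cpython-{new}-"
    if old_tag not in filename:
        raise ValueError(f"no {old_tag!r} tag in {filename!r}")
    return filename.replace(old_tag, new_tag, 1)


def retarget_path(path: str, old: str = "python3.5", new: str = "python3.7") -> str:
    """Point a path that mentions the old interpreter at the new one."""
    return path.replace(old, new)


if __name__ == "__main__":
    so_name = "_edgetpu_cpp_wrapper.cpython-35m-arm-linux-gnueabihf.so"
    print(retag_extension(so_name))
    # Hypothetical demo path, shown only to illustrate the substitution.
    print(retarget_path("/usr/local/lib/python3.5/dist-packages/edgetpu/demo"))
```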

With these changes, the USB accelerator works very well. There are plenty of demonstrations provided. In this image it has correctly identified two faces in a photo from a workshop I ran at Pi Towers a couple of years ago.

It's an impressive piece of hardware. I am particularly interested in the imprinting technique, which allows you to add new image recognition capability without retraining the whole of a compiled model.

Imprinting is a specialised form of transfer learning. It was introduced in this paper, and it appears to have a lot of potential. Watch this space!
