Baby talk - Audio analysis on the Pi

I'm working on two projects at present.

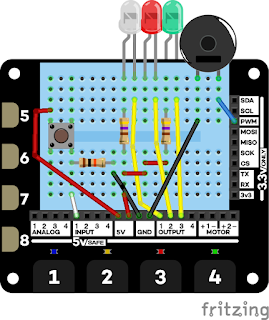

I want to finish my book on the Pimoroni Explorer HAT, and I'm getting close. All of the code examples are available on GitHub, many with documentation, and the book itself is now 70% finished. It's still only $5, but the price goes up this weekend.

I've also started another project - one that I expect to take months or years. It's based on some fascinating work by Jürgen Schmidhuber and his team on a technique they call UDRL - Upside-Down Reinforcement Learning.

It looks as if they have cracked a problem that has been bugging me for decades: how can we get an ANN-based Parent Agent to train a Child Agent without hard-wiring in an unrealistic amount of innate behaviour?

If you're interested in their solution (and have a basic background in reinforcement learning), there are two papers on arXiv that explain it:

Training Agents using Upside-Down Reinforcement Learning and

Reinforcement Learning Upside Down: Don't Predict Rewards -- Just Map Them to Actions.

The papers use game-based learning tasks, but I want to explore the idea of teaching a Child Agent to speak by getting it to imitate the Parent Agent.

Sound analysis

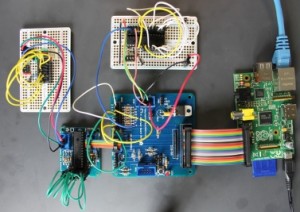

The first step of my project is to generate inputs for an ANN from the sounds that the Child Agent hears. I'm lucky to have a friend who is an expert on hearing; his recommended reading led me to some software on GitHub which analyses sound in much the same way as humans do. The image above shows the output generated from a short speech sample. It's like the spectrum you'd get from Fourier analysis, but it's closer to what experts think the human nervous system does.
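For comparison, here is a minimal sketch of the plain Fourier-style spectrum mentioned above, computed frame by frame with NumPy. This is not the GitHub software I used, and it doesn't model hearing; the function name `stft_magnitude`, the frame and hop sizes, and the synthetic 440 Hz test tone are all my own assumptions, chosen only for illustration.

```python
import numpy as np


def stft_magnitude(signal, frame_len=256, hop=128):
    """Return a (frames, bins) array of magnitude spectra.

    Hypothetical helper: a basic short-time Fourier transform,
    not an auditory model.
    """
    window = np.hanning(frame_len)  # taper each frame to reduce leakage
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop: i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # rfft keeps only the non-negative frequencies of a real signal
    return np.abs(np.fft.rfft(frames, axis=1))


# Assumed test input: one second of a 440 Hz sine wave at 8 kHz
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440 * t)

spec = stft_magnitude(tone)
freqs = np.fft.rfftfreq(256, d=1 / sample_rate)

# Frequency bin with the most energy, averaged over all frames
peak_hz = freqs[spec.mean(axis=0).argmax()]
print(spec.shape, peak_hz)
```

Each row of `spec` is one time slice and each column is one evenly spaced frequency bin, so the strongest bin lands close to 440 Hz. As I understand it, that even spacing is one of the places where a plain Fourier spectrum parts company with the ear.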
