Clusterfun with the Raspberry Pi ClusterHat

Want to build your own supercomputer at home?

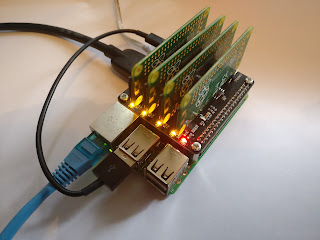

You can take a step in that direction with a Raspberry Pi 3, four Pi Zeros and a ClusterHat.

The ClusterHat allows you to connect four Pi Zeros to a Raspberry Pi controller and network them all together. You've now got 8 cores and 2 GB of memory to play with. That's not quite up to Cray computing standards, but it's enough to explore some modern clustering software.

Avoid these snags!

I've just got my cluster up and running, with four ssh sessions from the controller to the four Zeros. The instructions on the ClusterHat website are pretty clear, but I did hit a few minor snags before I got everything working smoothly.

Make sure you have a 2.5 Amp power supply.

The first is entirely my own fault, but don't make the same mistake! I used an old-style power supply which was rated at 2 amps. When I started the fourth Zero, the power supply became overloaded and Bad Things Happened. The controller crashed and corrupted its SD card, so I had to re-flash the image.

I switched to a 2.5 Amp supply, as recommended for the Pi 3, and now everything is fine.
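If you suspect a marginal supply, the Pi firmware can usually tell you. The sketch below decodes the value printed by `vcgencmd get_throttled`, where bit 0 means under-voltage right now and bit 16 means under-voltage at some point since boot. The function name is made up for illustration, and it assumes your firmware is recent enough to support that command.

```shell
#!/bin/sh
# Report whether a 'throttled=0x...' value shows an under-voltage event.
# Bit 0:  under-voltage detected right now.
# Bit 16: under-voltage has occurred since boot.
undervoltage_status() {
    value=${1#throttled=}      # strip the 'throttled=' prefix if present
    flags=$(($value))          # shell arithmetic accepts 0x... hex constants
    if [ $((flags & 0x1)) -ne 0 ]; then
        echo "under-voltage now"
    elif [ $((flags & 0x10000)) -ne 0 ]; then
        echo "under-voltage since boot"
    else
        echo "ok"
    fi
}

# On the controller Pi itself you could run:
# undervoltage_status "$(vcgencmd get_throttled)"
```

Anything other than "ok" after starting all four Zeros suggests the supply is struggling.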

Enable ssh on each image

You'll need to create an empty file called ssh in the boot partition of each SD card so that you can log in to each Pi Zero once the cluster is running.

If you're running Linux, you can just

touch /media/

or whatever the path is on the machine you're using to prepare the SD cards. Remember to do this for each of the images! If you forget, the Zeros will reject attempts to log in via ssh once they have started up, and you will have no safe way of shutting them down.
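With five cards to prepare, a small shell function saves some retyping. This is only a sketch: the function name is invented for illustration and the mount point in the example comment is a hypothetical placeholder, so substitute whatever path your machine gives the boot partition.

```shell
#!/bin/sh
# Create the empty 'ssh' marker file in a mounted boot partition.
# Usage: enable_ssh BOOT_DIR
enable_ssh() {
    boot_dir=$1
    if [ ! -d "$boot_dir" ]; then
        echo "not a directory: $boot_dir" >&2
        return 1
    fi
    touch "$boot_dir/ssh"
}

# Example (hypothetical mount point; adjust for your system):
# enable_ssh /media/yourname/boot
```

Run it once per card after mounting, then check that the ssh file is there before you eject.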

Be wary when you upgrade the software

It's good practice to upgrade the installed software on your Pi after installing a new image. If you do so, take care not to overwrite the custom configuration for dnsmasq that's on the special cluster images.

Here's what to watch out for when you run sudo apt-get upgrade:

apt-get may well find a newer version of the dnsmasq program, which is needed to network the Pi Zeros to the controlling Pi. Since dnsmasq comes with a default configuration file, you will be asked whether you want to retain or overwrite the custom configuration which the ClusterHat needs. Accept the default answer, which keeps the existing file and so leaves the required custom configuration in place.
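As a belt-and-braces measure, you could copy the configuration somewhere safe before upgrading. The sketch below assumes the customised file is /etc/dnsmasq.conf, which is a guess rather than something the ClusterHat instructions confirm here, so check where your image actually keeps it. The function name is also just illustrative.

```shell
#!/bin/sh
# Copy a config file to FILE.bak before an upgrade and print the backup path.
# Usage: backup_config FILE
backup_config() {
    src=$1
    if [ ! -f "$src" ]; then
        echo "no such file: $src" >&2
        return 1
    fi
    dest="$src.bak"
    # -p preserves the original mode and timestamps
    cp -p "$src" "$dest" && echo "$dest"
}

# Example (the path is an assumption, and writing to /etc needs root):
# backup_config /etc/dnsmasq.conf
# apt-get upgrade
```

If you answer the prompt wrongly, you can then copy the .bak file back over the new default. dpkg also has a `--force-confold` option that keeps existing configuration files without asking, which can be passed through as `sudo apt-get -o Dpkg::Options::="--force-confold" upgrade`.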

Parallel Computing made simple

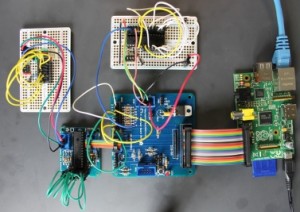

My particular interest is in running Dyalog APL on all five computers in the cluster. APL is a great language for exploratory programming, and it's also capable of high-performance computing. Morten Kromberg and I recently did some quick informal benchmarks and found that APL was up to five times faster than Python's NumPy for some matrix operations.

Since I am actively researching Neural Networks, and I build Robots controlled by the Raspberry Pi, I'm interested in anything that can squeeze more performance out of the platform.

Dyalog APL supports two different forms of parallel processing. The first needs no programmer effort; if the APL run-time engine detects that you are carrying out operations on large arrays, it will seamlessly and automatically share the work across multiple processor cores.

The second uses a language feature called Isolates. It allows you to farm off work to a different process, which may be running on a different processor. You can see a fun demonstration of Isolates on Morten Kromberg's blog.

Over the next few days Morten and I will be exploring the ClusterHat as a way of sharing computational workload using Isolates. Follow @rareblog and @dyalogapl to see our progress.
