Training ANNs on the Raspberry Pi 4 and Jetson Nano

Several published benchmarks compare the performance of the Raspberry Pi and the Jetson Nano. They suggest there is little to choose between them when running Deep Learning tasks.

I'm sure the results have been accurately reported, but I found them surprising.

Don't get me wrong. I love the Pi 4, and am very happy with the two I've been using. The Pi 4 is significantly faster than its predecessors, but...

The Jetson Nano has a powerful GPU that's optimised for many of the operations used by Artificial Neural Networks (ANNs).

I'd expect the Nano to significantly outperform the Pi running ANNs.

How can this be?

I think I've identified reasons for the surprising results.

At least one benchmark appears to have tested the Nano in 5 W power mode. I'm not 100% certain, as the author has not responded to several enquiries, but the article talks about the difficulty in finding a 4 A USB supply. That suggests that the author is not entirely familiar with the Nano and its documentation.

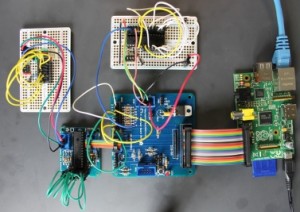

The docs make it clear that the Nano will only run at full speed if 10 W mode is selected, and 10 W mode requires a PSU with a barrel jack rather than a USB connector. You can see the barrel jack in the picture of the Nano above.
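One way to confirm which power mode a Nano is in before benchmarking is to query NVIDIA's `nvpmodel` tool. The sketch below is only an illustration and is not taken from any of the benchmarks discussed. It assumes that `nvpmodel -q` prints a line of the form `NV Power Mode: MAXN` (MAXN being the 10 W mode on the Nano); the helper names `parse_power_mode` and `query_power_mode` are hypothetical.

```python
import subprocess


def parse_power_mode(nvpmodel_output):
    """Return the mode name from `nvpmodel -q` output, or None if not found.

    Assumption: the output contains a line such as 'NV Power Mode: MAXN'.
    """
    for line in nvpmodel_output.splitlines():
        if line.strip().startswith("NV Power Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def query_power_mode():
    """Run `nvpmodel -q` on the Nano itself (may need to be run with sudo)."""
    result = subprocess.run(
        ["nvpmodel", "-q"], capture_output=True, text=True, check=True
    )
    return parse_power_mode(result.stdout)


# Parsing a sample of the assumed output format, without touching real hardware.
sample_output = "NV Power Mode: MAXN\n0\n"
print(parse_power_mode(sample_output))
```

On a real Nano you would call `query_power_mode()` and check that it reports the 10 W mode before timing anything.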

Another common factor is that most of the benchmarks test inference with a pre-trained network rather than training speed. While many applications will use pre-trained nets, training speed still matters; some applications will need training from scratch, and others will require transfer learning.

I've done a couple of quick tests of relative speed with a 4GB Pi 4 and a Nano, running training tasks on the MNIST digits and Fashion-MNIST datasets.

The Nano was in 10 W max-power mode. The Pi was cooled using the Pimoroni fan shim, and it did not get throttled by overheating.
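For anyone repeating the test, the Pi reports throttling through `vcgencmd get_throttled`, which prints a value such as `throttled=0x0`. The following is a minimal sketch of decoding that value; the function name `decode_throttled` is hypothetical, and it works on the output string so it can be tried away from a Pi.

```python
# Bit meanings of the value reported by `vcgencmd get_throttled`.
THROTTLE_FLAGS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output):
    """Turn a string such as 'throttled=0x50000' into a list of flag names."""
    value = int(output.strip().split("=", 1)[1], 16)
    return [
        name
        for bit, name in sorted(THROTTLE_FLAGS.items())
        if value & (1 << bit)
    ]


print(decode_throttled("throttled=0x0"))      # no flags set
print(decode_throttled("throttled=0x50000"))  # bits 16 and 18 set
```

An empty list after a training run means the Pi saw no throttling or under-voltage since boot.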

Training times using MNIST digits

The first network uses MNIST digits data and looks like this:

    import tensorflow as tf

    model = tf.keras.models.Sequential([
        tf.keras.layers.Conv2D(32, (3,3), activation='relu', input_shape=(28, 28, 1)),
        tf.keras.layers.MaxPooling2D(2, 2),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(10, activation='softmax')
    ])

While training, that takes about 50 seconds per epoch on the Nano, and about 140 seconds per epoch on the Pi 4.
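Per-epoch times like these can be collected with a small timer hooked into training. This is a sketch under stated assumptions, not necessarily how the figures in this post were taken: `EpochTimer` is a hypothetical helper, and the commented-out lines show one possible hookup through `tf.keras.callbacks.LambdaCallback`, with `x_train` and `y_train` standing in for the training data.

```python
import time


class EpochTimer:
    """Records wall-clock seconds for each training epoch."""

    def __init__(self, clock=time.perf_counter):
        self.clock = clock      # injectable so the timer can be tested
        self.durations = []     # one entry per completed epoch
        self._start = None

    def begin(self, epoch, logs=None):
        """Call at the start of an epoch."""
        self._start = self.clock()

    def end(self, epoch, logs=None):
        """Call at the end of an epoch."""
        self.durations.append(self.clock() - self._start)

    def mean(self):
        """Average seconds per epoch over all recorded epochs."""
        return sum(self.durations) / len(self.durations)


# Hypothetical hookup to Keras (needs TensorFlow and data, so not run here):
# timer = EpochTimer()
# cb = tf.keras.callbacks.LambdaCallback(
#     on_epoch_begin=timer.begin, on_epoch_end=timer.end)
# model.fit(x_train, y_train, epochs=5, callbacks=[cb])
# print(timer.mean())
```

The first epoch usually includes some one-off start-up cost, so it may be worth comparing it with the later epochs before averaging.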

MNIST Fashion training times with a second, larger CNN layer

The second network (borrowed from one of the deeplearning.ai course notebooks) uses the Fashion-MNIST dataset and looks like this:

    model = tf.keras.models.Sequential([
        tf.keras.layers.Conv2D(64, (3,3), activation='relu', input_shape=(28, 28, 1)),
        tf.keras.layers.MaxPooling2D(2, 2),
        tf.keras.layers.Conv2D(64, (3,3), activation='relu'),
        tf.keras.layers.MaxPooling2D(2,2),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(10, activation='softmax')
    ])

While training, that takes about 59 seconds per epoch on the Nano and about 336 seconds per epoch on the Pi.

Conclusion

These figures suggest that the Nano is between 2.8 and 5.7 times faster than the Pi 4 when training.
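The range comes straight from dividing the Pi's time per epoch by the Nano's for each network. A short check of that arithmetic, using only the timings reported above:

```python
# Seconds per epoch reported above, as (Nano, Pi 4).
timings = {
    "MNIST digits": (50, 140),
    "Fashion-MNIST": (59, 336),
}

# Speedup of the Nano over the Pi 4 = Pi time / Nano time.
speedups = {name: pi / nano for name, (nano, pi) in timings.items()}

for name, ratio in speedups.items():
    print(f"{name}: Nano is {ratio:.1f}x faster")
```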

That leaves me curious about relative performance when running pre-trained networks. I'll report when I have some more data.

There is no mystery. It is all in the price and specs. The Nano has a good price, an ordinary CPU and quite a good GPU. The RPi has a faster, new-generation CPU that is much better optimized, and a GPU that is not bad but is poor for ML. In general tasks, the RPi is a little faster. In video and games, the Nano is quite a bit faster, but it is 40% more expensive, considerably bigger and an odd shape. So for 90% of people the RPi is the right choice. But the point is what you can get for the same money, and which is the better choice for ML. It is clear to me that an RPi with a Coral USB TPU accelerator beats the Jetson Nano, and even the TX1, by a long way for just £10 more, and it still has a smaller form factor. I think that is the way to compare them.

Does an RPi + Coral TPU beat the Nano for TRAINING?

This article is interesting because it looks at training time.

And for training on tasks that are not vision-specific, are Coral cards still so good?
