Benchmarking TF-TRT on the Raspberry Pi and Jetson Nano

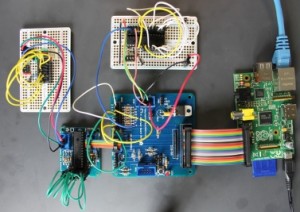

I recently posted some results from benchmarks I ran training and running TensorFlow networks on the Raspberry Pi 4 and Jetson Nano. They generated a lot of interest, but some readers questioned their relevance. They weren't interested in training networks on edge devices.

Most people expect to train on higher-power hardware and then deploy the trained networks on the Pi and Nano. If they use TensorFlow for training, they have several choices for deployment:

- Standard TensorFlow

- TensorFlow Lite

- TF-TRT (a TensorFlow wrapper around NVIDIA's TensorRT, or TRT)

- Raw TensorRT

I've run a number of benchmarks, and the results have been much as I expected.

I did encounter one surprising result, though, which I'll talk about at the end of the post. It's a pitfall that could easily have invalidated the figures I'm about to share.

Benchmarking MobileNet V2

The results I'll report are based on running MobileNetV2 pre-trained with ImageNet data. I've adapted the code from the excellent DLology blog, which covers deployment to the Nano. I've also deployed the model on the Pi using a hacked community build of TensorFlow, obtained from here. That has a wheel containing TF-TRT for Python 3.7, which is the default for Raspbian Buster.

(The wheel seems to have a minor bug. This weekend I'll set up a GitHub repo with the sed script I used to work around that, along with the notebooks I used to run the tests.)

The Pi is a 4B with 4GB of RAM, running a freshly updated version of Raspbian Buster.

So here are the results you've been waiting for, expressed in seconds per image and frames per second (FPS).

| Platform | Software | Seconds/image | FPS |
|---|---|---|---|
| Raspberry Pi | TF | 0.226 | 4.42 |
| Raspberry Pi | TF-TRT | 0.20 | 5.13 |
| Jetson Nano | TF | 0.082 | 12.2 |
| Jetson Nano | TF-TRT | 0.04 | 25.63 |

According to these figures, the Nano is roughly three to five times faster than the Pi (about 2.8x with standard TensorFlow and 5x with TF-TRT), and TF-TRT is about twice as fast as standard TensorFlow on the Nano.
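For anyone who wants to check those ratios, here is a small sketch that derives them from the seconds-per-image column of the results table. The dictionary layout and the `speedup` helper are my own illustration, not part of the benchmark notebooks.

```python
# Seconds per image, copied from the results table above.
seconds_per_image = {
    ("Raspberry Pi", "TF"): 0.226,
    ("Raspberry Pi", "TF-TRT"): 0.20,
    ("Jetson Nano", "TF"): 0.082,
    ("Jetson Nano", "TF-TRT"): 0.04,
}


def speedup(slow, fast):
    """How many times faster `fast` is than `slow` (ratio of seconds per image)."""
    return seconds_per_image[slow] / seconds_per_image[fast]


# Nano versus Pi, for each software stack.
nano_vs_pi_tf = speedup(("Raspberry Pi", "TF"), ("Jetson Nano", "TF"))
nano_vs_pi_trt = speedup(("Raspberry Pi", "TF-TRT"), ("Jetson Nano", "TF-TRT"))

# TF-TRT versus standard TensorFlow, on each platform.
trt_vs_tf_nano = speedup(("Jetson Nano", "TF"), ("Jetson Nano", "TF-TRT"))
trt_vs_tf_pi = speedup(("Raspberry Pi", "TF"), ("Raspberry Pi", "TF-TRT"))

print(f"Nano vs Pi (TF):     {nano_vs_pi_tf:.2f}x")
print(f"Nano vs Pi (TF-TRT): {nano_vs_pi_trt:.2f}x")
print(f"TF-TRT vs TF (Nano): {trt_vs_tf_nano:.2f}x")
print(f"TF-TRT vs TF (Pi):   {trt_vs_tf_pi:.2f}x")
```

The seconds-per-image figures are rounded, so ratios computed from the FPS column may differ slightly.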

TF-TRT is only slightly faster than standard TensorFlow on the Pi. I'm not sure why this should be, but the timings are pretty consistent. At some stage I'll run some other models, but these will have to do for now.
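If you want to collect per-image timings like these yourself, a loop along the following lines is one way to do it. This is a minimal sketch rather than the exact code from my notebooks: `benchmark` and `fake_predict` are hypothetical names, and `fake_predict` is a stand-in workload that you would replace with a call into your own TensorFlow or TF-TRT session.

```python
import time


def benchmark(predict, image, warmup=5, runs=50):
    """Return (seconds_per_image, fps) for a callable that processes one image."""
    # Warm-up calls are not timed, since the first few calls to a model
    # can include one-off setup costs that would skew the average.
    for _ in range(warmup):
        predict(image)

    start = time.perf_counter()
    for _ in range(runs):
        predict(image)
    elapsed = time.perf_counter() - start

    seconds_per_image = elapsed / runs
    return seconds_per_image, 1.0 / seconds_per_image


def fake_predict(image):
    """Stand-in workload (an assumption); swap in a real model call."""
    return sum(x * x for x in image)


seconds, fps = benchmark(fake_predict, list(range(10000)), warmup=2, runs=20)
print(f"{seconds:.6f} s/image, {fps:.2f} FPS")
```

The warm-up and run counts here are arbitrary defaults. Averaging over more runs gives steadier numbers at the cost of a longer test.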

A benchmarking pitfall

[Screenshot: jtop]

Fortunately Raffaello Bonghi's excellent jtop package saved the day. jtop is an enhanced version of top for Jetsons which shows real-time GPU and memory usage.

Looking at its output, I realised that an earlier session on the Nano was still taking up memory. Once I'd closed the session down, a re-run gave me the 25 fps which I and others had seen before.
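One way to guard against this in future is to check available memory before starting a run. The Nano's GPU shares system RAM with the CPU, so a stale session shows up in the ordinary Linux memory figures. The sketch below parses text in the format of `/proc/meminfo`. The helper names, the sample values, and the 1500 MB threshold are all assumptions for illustration, not measurements from my devices.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer kB values."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def enough_free_memory(meminfo_text, min_available_mb):
    """True if MemAvailable is at least min_available_mb megabytes."""
    available_kb = parse_meminfo(meminfo_text).get("MemAvailable", 0)
    return available_kb / 1024 >= min_available_mb


# Made-up sample resembling a device where an old session still holds memory.
SAMPLE = """MemTotal:        4051032 kB
MemFree:          212340 kB
MemAvailable:     901120 kB
"""

# The threshold is an arbitrary example value, not a recommendation.
if not enough_free_memory(SAMPLE, min_available_mb=1500):
    print("Low memory: close other sessions before benchmarking")
```

On the device itself you would pass in `open("/proc/meminfo").read()` instead of `SAMPLE`.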

I continue to be impressed by the Pi 4 and the Nano.

While the Nano's GPU offers significantly faster performance on deep learning tasks, it costs almost twice as much. Both represent excellent value for money, and your choice will depend on the requirements for your project.
