Getting Started with the Jetson Nano - part 4

I'm amazed at how much the Nano can do on its own, but there are times when it needs some help.

Some deep learning models are too large to train on the Nano. Others need so much data that training times on the Nano would be prohibitive.

Today's post shows you one simple solution.

In the previous tutorial, you went through five main steps to deploy the TensorFlow model:

The Keras interface to TensorFlow makes it very easy to export a trained model to a file. That file contains information about the way the model is structured, and it also contains the weights which were set as the model learned from the training data.

That's all you need to recreate a usable copy of the trained model on a different computer.

The notebook you'll run in this tutorial will make use of Laurence Moroney's open source rock paper scissors dataset.

This contains images of hands showing rock, paper or scissors gestures. The hands come in a variety of shapes, sizes and skin colours, and they are great for experimenting with image classification.

Laurence uses that dataset in the Convolutional Neural Networks with TensorFlow course on Coursera.

(I strongly recommend the course, which is very well designed and superbly taught).

In one of the exercises in the course, students save a trained model that recognises the rock, papers, scissors hands.

They create and run the model using Google's amazing Colab service.

If you haven't come across it, Colab allows users who have registered to run deep learning experiments on Google's powerful hardware free of charge. (Colab also has a great online introduction to Jupyter notebooks.)

Today's notebook will show you how to load some data, and then load the trained model from the course onto your Nano. Then you can use the model to classify images.

You don't need to go through the process of training the model - I've done it for you.

(Thanks to Laurence Moroney for permission to use the course model for this tutorial!)

There are a couple of steps you need to take before you can run the next notebook.

The first step will set up a swap file on your Nano. If you're not familiar with the term, a swap file can be used by your Nano's Ubuntu Operating System to pretend to programs that the Nano has more than 4 GB of RAM.

The TensorFlow model you'll be using with rock. paper, scissors has over 3.4 million weights, so it uses a lot of memory. The swap file will allow you to load and run the model on the Nano.

The second step will install scipy, another Python package that is required for the model to run.

Here's what you should do first:

You'll be using a script set up by the folks at JetsonHacks. You'll clone their repository from GitHub and then run the script. After that, you'll reboot the Nano to make sure that your swap file is properly set up.

1. Open a Jupyter lab window on your Nano as you did in the previous tutorial.

2. Click on the + sign to the left, just below the Edit menu to open a new Launcher.

3. Click on the Terminal icon at the bottom left hand of the right-hand pane.

A terminal window will open.

4. In the terminal window, type the following commands:

5. You should see output like this:

Cloning into 'installSwapfile'...

remote: Enumerating objects: 22, done.

remote: Counting objects: 100% (22/22), done.

remote: Compressing objects: 100% (17/17), done.

remote: Total 22 (delta 8), reused 16 (delta 5), pack-reused 0

Unpacking objects: 100% (22/22), done.

romilly@nano-02:~$ cd installSwapfile/

romilly@nano-02:~/installSwapfile$ sudo ./installSwapfile.sh -s 2

[sudo] password for romilly:

Creating Swapfile at: /mnt

Swapfile Size: 2G

Automount: Y

-rw-r--r-- 1 root root 2.0G May 20 10:02 swapfile

-rw------- 1 root root 2.0G May 20 10:02 swapfile

Setting up swapspace version 1, size = 2 GiB (2147479552 bytes)

no label, UUID=b139aeed-54d0-4cda-9ccf-962b9fefd3b8

Filename Type Size Used Priority

/mnt/swapfile file 2097148 0 -1

Modifying /etc/fstab to enable on boot

/mnt/swapfile

Swap file has been created

Reboot to make sure changes are in effect

romilly@nano-02:~/installSwapfile$

6. Reboot the Nano and check the swap file has been installed.

Type sudo reboot

Enter your password if asked to do so.

Your Nano will close down and then re-start. You'll probably see a warning in your browser saying that a connection could not be established. That's quite normal; the browser has noticed that the Nano is re-booting.

After 30 seconds or so, reload the lab web page. It should reconnect to the nano.

Once again, open the launcher and start a terminal window.

In it, type

cat /etc/fstab

You should see output like this:

The key line reads /mnt/swapfile swap swap defaults 0 0

If you can see that, the swapfile has been installed correctly.

The TensorFlow example you'll be running requires another Python package called scipy.

scipy has a lot of useful features, but it takes a while to install, and it relies on a couple of other Ubuntu packages. Install the prerequisite packages first.

Type

When asked if you want to continue with installation, say yes.

You'll see output like this:

Now you're ready to install scipy.

Type

At the end you should see this:

You've one more steup to take in order to install the saved network and the next notebook, and it is very quick.

Make sure you are in the nano directory. (If not, just enter cd ~/nano ).

Type git pull

This will load the changes from my nano repository on GitHub. These include the saved network you'll be using and the notebook you'll run.

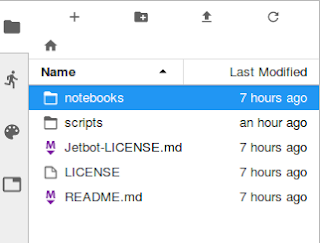

The left-hand pane of your browser window should look like this (of course, the updated times will be different):

Double-click on the notebooks folder in the left-hand pane.

The pane should now look like this:

The data folder contains a file called rps.h5 which contains the saved neural network.

The notebooks folder contains three notebooks; you'll use the one called loading.ipynb.

Double-click on loading.ipynb

Your screen should look like this:

From the Run menu, select Run All Cells.

You'll see your notebook load the data, load the network and print out its structure. Then you'll see it classify an image and display the image it processed. Here's a video

Congratulations! Your Nano is now that little bit smarter :)

In the next post, you'll see transfer learning - a very valuable technique that allows you to take a pre-trained network and fine-tune it to perform a specialised task.

If you have questions, comments or suggestions, come and visit the (unofficial) Jetson Nano group on FaceBook.

A frustrating problem...

Some deep learning models are too large to train on the Nano. Others need so much data that training times on the Nano would be prohibitive.

Today's post shows you one simple solution.

... and a simple solution

In the previous tutorial, you went through five main steps to deploy the TensorFlow model:

- Get training data

- Define the model

- Train the model

- Test the model

- Use the model to classify unseen data

Saving and Loading Keras Models

The Keras interface to TensorFlow makes it very easy to export a trained model to a file. That file contains information about the way the model is structured, and it also contains the weights which were set as the model learned from the training data.

That's all you need to recreate a usable copy of the trained model on a different computer.
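Here's a minimal sketch of that save-and-reload round trip, assuming TensorFlow 2.x with the h5py package installed. The tiny one-layer model and the file name tiny_model.h5 are hypothetical stand-ins for a model trained on Colab; they are not the rock, paper, scissors network.

```python
# Minimal sketch, assuming TensorFlow 2.x and h5py are installed.
# The model and "tiny_model.h5" are hypothetical stand-ins, not the course model.
import numpy as np
import tensorflow as tf

# On the training machine (e.g. Colab): define and compile a very small model.
model = tf.keras.Sequential([
    tf.keras.layers.Dense(3, activation="softmax", input_shape=(4,)),
])
model.compile(optimizer="adam", loss="categorical_crossentropy")

# Save both the structure and the weights to a single HDF5 file.
model.save("tiny_model.h5")

# On the other computer (e.g. the Nano): rebuild the model from the file alone.
restored = tf.keras.models.load_model("tiny_model.h5")

# The restored copy should give the same outputs as the original.
x = np.random.rand(2, 4).astype("float32")
same = np.allclose(model.predict(x, verbose=0), restored.predict(x, verbose=0))
print("predictions match:", same)
```

The .h5 extension asks Keras for its single-file HDF5 format, the same format as the rps.h5 file you'll load later. Newer Keras releases favour the .keras format but can still read .h5 files.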

Rock, Paper, Scissors

The notebook you'll run in this tutorial will make use of Laurence Moroney's open source rock paper scissors dataset.

This contains images of hands showing rock, paper or scissors gestures. The hands come in a variety of shapes, sizes and skin colours, and they are great for experimenting with image classification.

Laurence uses that dataset in the Convolutional Neural Networks in TensorFlow course on Coursera.

(I strongly recommend the course, which is very well designed and superbly taught.)

In one of the exercises in the course, students save a trained model that recognises the rock, paper and scissors hand gestures.

They create and run the model using Google's amazing Colab service.

If you haven't come across it, Colab lets registered users run deep learning experiments on Google's powerful hardware free of charge. (Colab also has a great online introduction to Jupyter notebooks.)

Today's notebook will show you how to load some data, and then load the trained model from the course onto your Nano. Then you can use the model to classify images.

You don't need to train the model yourself; I've done it for you.

(Thanks to Laurence Moroney for permission to use the course model for this tutorial!)

Before you run the notebook

There are a couple of steps you need to take before you can run the next notebook.

The first step will set up a swap file on your Nano. If you're not familiar with the term, a swap file is an area of disk that your Nano's Ubuntu operating system can use as extra virtual memory, so programs can behave as if the Nano had more than its 4 GB of RAM.

The TensorFlow model you'll be using with rock, paper, scissors has over 3.4 million weights, and together with TensorFlow itself it needs a lot of memory. The swap file will let you load and run the model on the Nano.
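To put the 3.4 million figure in perspective, here's a rough back-of-the-envelope estimate in Python. It assumes each weight is stored as a 32-bit (4-byte) float, TensorFlow's usual default; the helper name weight_memory_mb is just for illustration.

```python
# Rough estimate, assuming 32-bit (4-byte) floats for every weight.
BYTES_PER_FLOAT32 = 4

def weight_memory_mb(num_weights: int, bytes_per_weight: int = BYTES_PER_FLOAT32) -> float:
    """Memory needed just to hold the weights, in megabytes (1 MB = 10**6 bytes)."""
    return num_weights * bytes_per_weight / 1e6

print("{:.1f} MB".format(weight_memory_mb(3_400_000)))  # → 13.6 MB
```

The weights alone come to roughly 13.6 MB, so they are only part of the story. TensorFlow itself, the intermediate results computed for each image and the data the notebook loads probably account for much more, and the Nano's 4 GB is shared between the CPU and the GPU.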

The second step will install scipy, another Python package that is required for the model to run.

Here's what you should do first:

Setting up a swap file

You'll be using a script set up by the folks at JetsonHacks. You'll clone their repository from GitHub and then run the script. After that, you'll reboot the Nano to make sure that your swap file is properly set up.

1. Open a JupyterLab window on your Nano as you did in the previous tutorial.

2. Click the + sign on the left, just below the Edit menu, to open a new Launcher.

3. Click the Terminal icon at the bottom left of the right-hand pane.

A terminal window will open.

4. In the terminal window, type the following commands:

cd ~

git clone https://github.com/JetsonHacksNano/installSwapfile.git

cd installSwapfile/

sudo ./installSwapfile.sh -s 2

Enter your password when asked to do so.

5. You should see output like this:

Cloning into 'installSwapfile'...

remote: Enumerating objects: 22, done.

remote: Counting objects: 100% (22/22), done.

remote: Compressing objects: 100% (17/17), done.

remote: Total 22 (delta 8), reused 16 (delta 5), pack-reused 0

Unpacking objects: 100% (22/22), done.

romilly@nano-02:~$ cd installSwapfile/

romilly@nano-02:~/installSwapfile$ sudo ./installSwapfile.sh -s 2

[sudo] password for romilly:

Creating Swapfile at: /mnt

Swapfile Size: 2G

Automount: Y

-rw-r--r-- 1 root root 2.0G May 20 10:02 swapfile

-rw------- 1 root root 2.0G May 20 10:02 swapfile

Setting up swapspace version 1, size = 2 GiB (2147479552 bytes)

no label, UUID=b139aeed-54d0-4cda-9ccf-962b9fefd3b8

Filename Type Size Used Priority

/mnt/swapfile file 2097148 0 -1

Modifying /etc/fstab to enable on boot

/mnt/swapfile

Swap file has been created

Reboot to make sure changes are in effect

romilly@nano-02:~/installSwapfile$

6. Reboot the Nano and check that the swap file has been installed.

Type sudo reboot

Enter your password if asked to do so.

Your Nano will shut down and then restart. You'll probably see a warning in your browser saying that a connection could not be established. That's quite normal; the browser has noticed that the Nano is rebooting.

After 30 seconds or so, reload the JupyterLab page. It should reconnect to the Nano.

Once again, open the launcher and start a terminal window.

In it, type

cat /etc/fstab

You should see output like this:

The key line reads /mnt/swapfile swap swap defaults 0 0

If you can see that, the swap file has been installed correctly.
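If you'd rather check from Python (for example, in a notebook cell), here's a small sketch that looks for the same fstab line and also reads /proc/swaps, the kernel's list of active swap areas. That file has the same Filename/Type/Size/Used/Priority layout as the table the script printed, with sizes in KiB. The sketch assumes the swap file is at /mnt/swapfile, as in the script output above; your list of active swap areas may include other entries too.

```python
from pathlib import Path
from typing import Dict

def has_swap_entry(fstab_text: str, swapfile: str = "/mnt/swapfile") -> bool:
    """Return True if an uncommented fstab line sets up `swapfile` as swap."""
    for line in fstab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        # fstab fields: device, mount point, type, options, dump, pass
        if len(fields) >= 3 and fields[0] == swapfile and fields[2] == "swap":
            return True
    return False

def active_swaps(proc_swaps_text: str) -> Dict[str, int]:
    """Map each active swap area to its size in KiB, from /proc/swaps-style text."""
    swaps = {}
    for line in proc_swaps_text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 3:
            swaps[fields[0]] = int(fields[2])
    return swaps

if __name__ == "__main__":
    fstab, proc = Path("/etc/fstab"), Path("/proc/swaps")
    if fstab.exists():
        print("fstab entry present:", has_swap_entry(fstab.read_text()))
    if proc.exists():
        for name, kib in active_swaps(proc.read_text()).items():
            print("active swap: {} ({:.1f} GiB)".format(name, kib / 1024 ** 2))
```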

Install scipy

The TensorFlow example you'll be running requires another Python package called scipy.

scipy has a lot of useful features, but it takes a while to install, and it relies on a few other Ubuntu packages. Install those prerequisite packages first.

Type

sudo apt-get install build-essential gfortran libatlas-base-dev

And enter your password when it's requested.

When asked if you want to continue with installation, say yes.

You'll see output like this:

Now you're ready to install scipy.

Type

sudo -H pip3 install scipy

Once you've entered the line above, leave the installation running. It took about 30 minutes on my Nano.

At the end you should see this:
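As an extra check once pip has finished, you can ask Python whether it can find the packages the notebook needs. This sketch uses only the standard library's importlib.util.find_spec, which locates a top-level package without importing it; the package list is an assumption based on this post. Run it with the same Python 3 that your notebooks use, so it sees the same packages.

```python
import importlib.util
from typing import Iterable, List

def missing_packages(names: Iterable[str]) -> List[str]:
    """Return the top-level package names Python cannot find, without importing them."""
    return [name for name in names if importlib.util.find_spec(name) is None]

if __name__ == "__main__":
    # Assumption: these are the two packages the rock, paper, scissors notebook relies on.
    missing = missing_packages(["scipy", "tensorflow"])
    print("missing packages:", ", ".join(missing) if missing else "none")
```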

You have one more step to take to install the saved network and the next notebook, and it's very quick.

Make sure you are in the nano directory. (If you're not, enter cd ~/nano.)

Type git pull

This will load the changes from my nano repository on GitHub. These include the saved network you'll be using and the notebook you'll run.

The left-hand pane of your browser window should look like this (of course, the updated times will be different):

Double-click on the notebooks folder in the left-hand pane.

The pane should now look like this:

The data folder contains a file called rps.h5, which holds the saved neural network.

The notebooks folder contains three notebooks; you'll use the one called loading.ipynb.
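If the notebook later complains that it can't load rps.h5, one quick sanity check is whether the file really is an HDF5 file. HDF5 files begin with a fixed 8-byte signature, so this sketch simply compares the first eight bytes. The path ~/nano/notebooks/data/rps.h5 is a hypothetical location; adjust it to wherever git pull put the file.

```python
from pathlib import Path

# The 8-byte signature that starts the HDF5 superblock.
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"

def looks_like_hdf5(path) -> bool:
    """Return True if the file starts with the HDF5 signature.

    Assumes the signature is at offset 0, which is typical for Keras .h5 files;
    HDF5 also allows it at offsets 512, 1024, 2048 and so on, which this ignores.
    """
    with open(str(path), "rb") as f:
        return f.read(len(HDF5_SIGNATURE)) == HDF5_SIGNATURE

if __name__ == "__main__":
    model_file = Path.home() / "nano" / "notebooks" / "data" / "rps.h5"  # hypothetical path
    if model_file.exists():
        print(model_file, "looks like HDF5:", looks_like_hdf5(model_file))
    else:
        print("not found:", model_file)
```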

Double-click on loading.ipynb

Your screen should look like this:

From the Run menu, select Run All Cells.

See the Notebook running

You'll see your notebook load the data, load the network and print out its structure. Then you'll see it classify an image and display the image it processed. Here's a video
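The post doesn't reproduce the notebook's code, so here's a hedged sketch of just the final step: turning the model's softmax output for one image into a label. It assumes the three classes are indexed alphabetically (paper, rock, scissors), which is how Keras's flow_from_directory numbers class folders by default; check the notebook's own class mapping before relying on it. The helper name top_class is hypothetical.

```python
from typing import Sequence, Tuple

# Assumption: alphabetical class order, as flow_from_directory assigns by default.
CLASS_NAMES = ("paper", "rock", "scissors")

def top_class(probabilities: Sequence[float],
              class_names: Sequence[str] = CLASS_NAMES) -> Tuple[str, float]:
    """Return the most likely class name and its probability for one softmax output row."""
    if len(probabilities) != len(class_names):
        raise ValueError("expected one probability per class")
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return class_names[best], probabilities[best]

# With a loaded model, you would pass in one row of its predictions, e.g.:
#   name, p = top_class(model.predict(batch)[0])
print(top_class([0.05, 0.90, 0.05]))
```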

Congratulations! Your Nano is now that little bit smarter :)

In the next post, you'll see transfer learning: a very valuable technique that lets you take a pre-trained network and fine-tune it to perform a specialised task.

If you have questions, comments or suggestions, come and visit the (unofficial) Jetson Nano group on Facebook.
