I2C and SPI - what would you like to know?

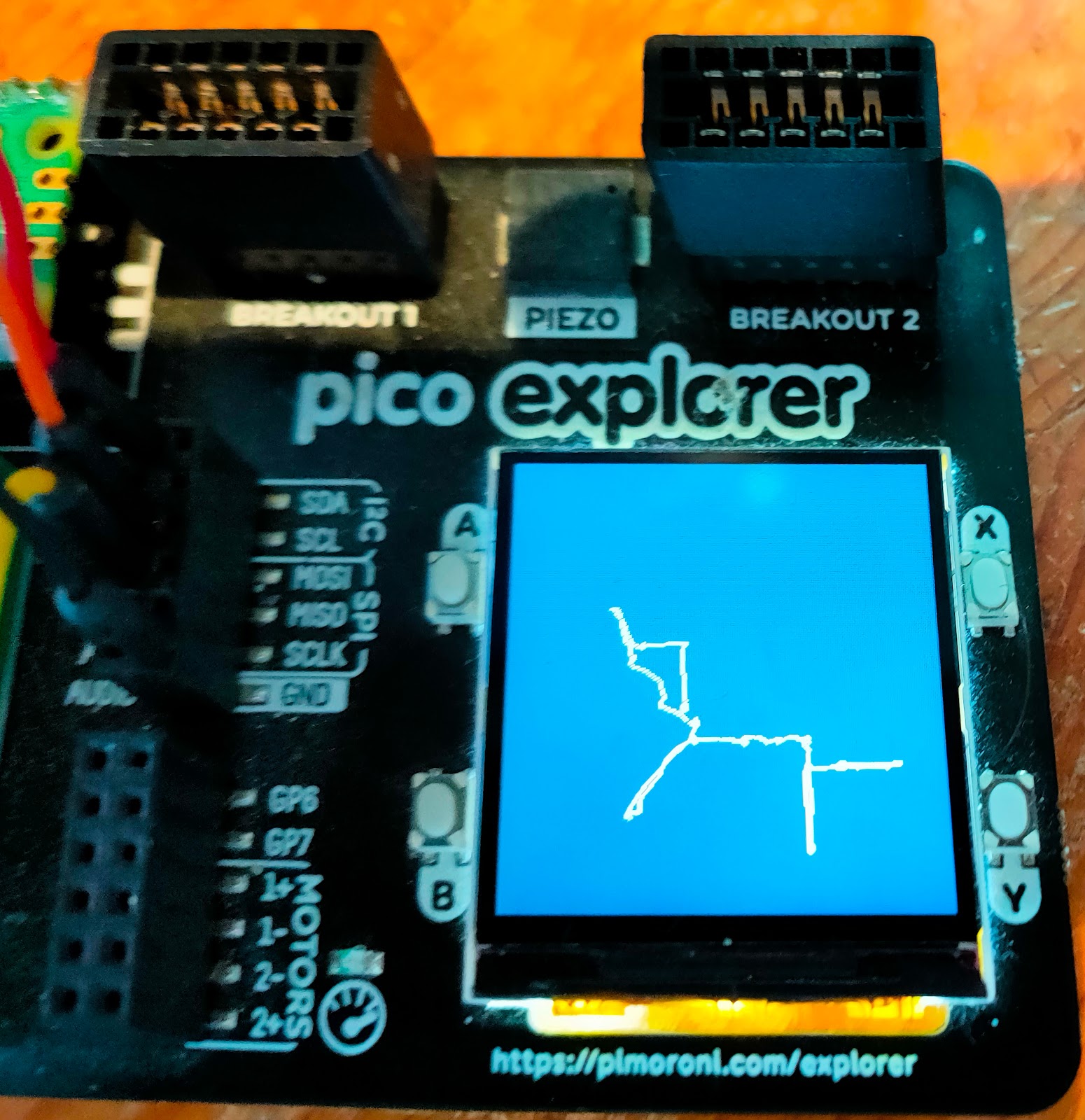

You can find more about I2C, SPI, Raspberry Pi and Pico on the free RAREBlog stack . I'll be posting all new RAREblog content on that stack. I will keep old content here, since some of these pages still get inbound traffic from links. My goal for the next few months is to post a full article each week, but I'm also sharing work in progress as substack notes. You can follow those without signing up for the full substack experience. In the current project, I'm getting a monochrome display to show how the current through an LED varies with voltage. I'm nearly there: I expect to complete the project in the next day or so. Here's the display; the video below shows the breadboard with the Pico, the LED and additional circuitry. Here's the latest plot; it's generated on my workstation from a csv file, but I should soon have a version on the OLED display! Remember to follow the new version of RAREblog if you want to follow my progress!